Fixing memory leaks in Tomcat

Paweł Ostrowski

Business Software Developer

Mateusz Maciejewski

DevOps Specialist

- 19 May 2018

- Knowledge base

- 5 min

Encountering the issue

One of our developer teams is building an application using microservices architecture. One of the implications of this decision is that we have multiple applications that need to be deployed to the application server of our choice. We are using Tomcat 8, which is running on CentOS 7 and we have around 20 applications deployed to a server at the same time. Once upon a time, when we were redeploying our applications, one of our Jenkins jobs failed with the following stack trace:

Deploying ERROR: Build step failed with exception org.codehaus.cargo.container.ContainerException: Failed to deploy [C:\.jenkins\jenkins_home\jobs\Apsello_Timesheet_DeployDev_Delta_Deploy\workspace\TimesheetServer\build\libs\TimesheetBE##168_275052bbda394863d5ff75dedb8991b2f227d334.war] Caused by: java.net.ConnectException: Connection refused: connect at java.net.DualStackPlainSocketImpl.connect0(Native Method) at java.net.DualStackPlainSocketImpl.socketConnect(Unknown Source) at java.net.AbstractPlainSocketImpl.doConnect(Unknown Source) at java.net.AbstractPlainSocketImpl.connectToAddress(Unknown Source) at java.net.AbstractPlainSocketImpl.connect(Unknown Source) at java.net.PlainSocketImpl.connect(Unknown Source) at java.net.SocksSocketImpl.connect(Unknown Source) at java.net.Socket.connect(Unknown Source) at java.net.Socket.connect(Unknown Source) at sun.net.NetworkClient.doConnect(Unknown Source) at sun.net.www.http.HttpClient.openServer(Unknown Source) at sun.net.www.http.HttpClient.openServer(Unknown Source) at sun.net.www.http.HttpClient.parseHTTPHeader(Unknown Source) at sun.net.www.http.HttpClient.parseHTTP(Unknown Source) at sun.net.www.protocol.http.HttpURLConnection.getInputStream0(Unknown Source) at sun.net.www.protocol.http.HttpURLConnection.getInputStream(Unknown Source) at org.codehaus.cargo.container.tomcat.internal.TomcatManager.invoke(TomcatManager.java:544)

We have begun our investigation at the source – looking at tomcat’s log files. In the catalina.out we have found the following:

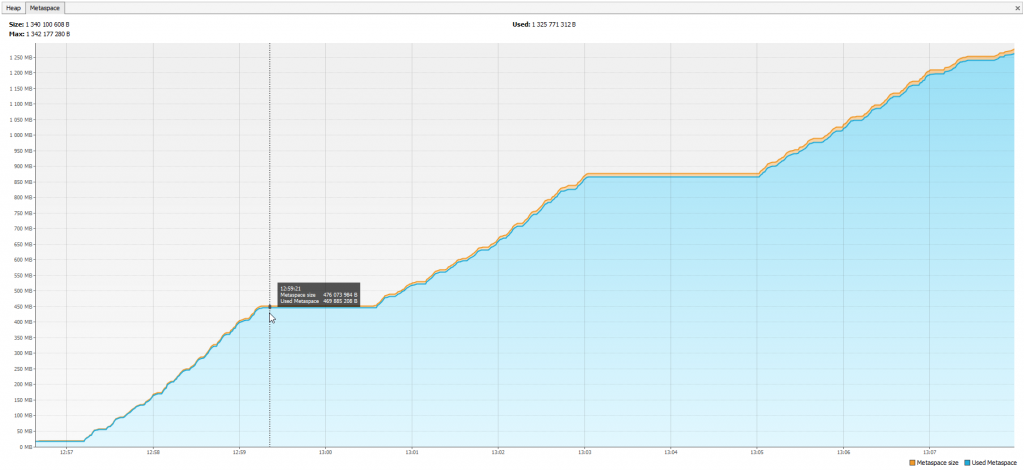

Our metaspace was running out. Tomcat’s MaxMetaspaceSize was set to well over 1.25GB, and our applications took around 450 MB when deployed, so it was a red flag. We’ve added following parameters to CATALINA_OPTS in both tomcat’s /bin/setenv.sh to enable remote JMX connection and connect VisualVM to see how our metaspace is behaving:

-Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.port=9999 -Dcom.sun.management.jmxremote.ssl=false -Djava.rmi.server.hostname=localhost

Once we’ve started monitoring our metaspace, we’ve noticed that it isn’t released after redeploying our apps. Each redeploy added around 450MB to the used metaspace, with third one going over our set limit of 1.25 GB of metaspace, effectively stopping our tomcat.

Upon examining our tomcat’s logs, we’ve found the following message:

Mar 30, 2017 2:59:30 PM org.apache.catalina.loader.WebappClassLoaderBase clearReferencesThreads WARNING: The web application [BackendApp] appears to have started a thread named [Resource Destroyer in BasicResourcePool.close()] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread: java.lang.System.currentTimeMillis(Native Method) com.mysql.jdbc.MysqlIO.send(MysqlIO.java:3736) com.mysql.jdbc.MysqlIO.quit(MysqlIO.java:2254) com.mysql.jdbc.ConnectionImpl.realClose(ConnectionImpl.java:4267) com.mysql.jdbc.ConnectionImpl.close(ConnectionImpl.java:1531) com.mchange.v2.c3p0.impl.NewPooledConnection.close(NewPooledConnection.java:642) com.mchange.v2.c3p0.impl.NewPooledConnection.closeMaybeCheckedOut(NewPooledConnection.java:255) com.mchange.v2.c3p0.impl.C3P0PooledConnectionPool$1PooledConnectionResourcePoolManager.destroyResource(C3P0PooledConnectionPool.java:622) com.mchange.v2.resourcepool.BasicResourcePool$1DestroyResourceTask.run(BasicResourcePool.java:1076) com.mchange.v2.resourcepool.BasicResourcePool.destroyResource(BasicResourcePool.java:1101) com.mchange.v2.resourcepool.BasicResourcePool.destroyResource(BasicResourcePool.java:1062) com.mchange.v2.resourcepool.BasicResourcePool.access$100(BasicResourcePool.java:44) com.mchange.v2.resourcepool.BasicResourcePool$5.run(BasicResourcePool.java:1316)

Which indicated that our problem might originate from a database threadpool manager that we are using – the c3p0 library. To confirm our suspicions, we have removed c3p0 from our project and opted to use a basic DataSource instead. In a test environment with a Windows OS, it worked (our applications freed up metaspace).

We thought it might be related to the improper closure of polled connections by hibernating when closing session factory. To alleviate that, we have found confirmed solutions from stack overflow (http://stackoverflow.com/a/17702965). However, after implementing it, noting has changed (for more details see: https://hibernate.atlassian.net/browse/HHH-7364).

As the library itself is still in the pre-production stage, we have determined that a possible course of action would be to update it to the newest available version and so we did. We have changed the version in our build.gradle from

org.hibernate:hibernate-c3p0:'4.3.11.Final'

to

org.hibernate:hibernate-c3p0:'5.2.9.Final'

Again, in our testing environment, it worked. However, it didn’t work on our development environments which run on CentOS 7. To determine whether our OS played a part in this, we have decided to create a Docker container to ignore as many factors as we could.

We have linked a Github repo which contains the Dockerfile.

The container consists of Ubuntu OS with Tomcat and Oracle JDK 8. When run on this container, the majority of our applications (except for two) did not free up the metaspace. We have begun investigating the differences between these two and the remainder of our applications. After examining Gradle dependencies of the projects, we’ve narrowed the problem down to issue with scopes of dependencies – applications which undeployed correctly had MySQL connector as a compile dependency, while the rest had it under providedRuntime. For some reason, our Tomcat misbehaved when database connection was shared between multiple applications. Our fix to that was to change the scope to compile for the remaining applications. Thanks to that, a majority of our application started to free up the metaspace. Not all of them though. As for the remaining applications that resisted our best efforts, we have found the following message in our logs:

Mar 30, 2017 12:38:30 PM org.apache.catalina.loader.WebappClassLoaderBase clearReferencesThreads WARNING: The web application [BackendApp] appears to have started a thread named [Resource Destroyer in BasicResourcePool.close()] but has failed to stop it. This is very likely to create a memory leak. Stack trace of thread: java.lang.System.currentTimeMillis(Native Method) ch.qos.logback.classic.spi.LoggingEvent.<init>(LoggingEvent.java:127 ch.qos.logback.classic.Logger.buildLoggingEventAndAppend(Logger.java:440) ch.qos.logback.classic.Logger.filterAndLog_0_Or3Plus(Logger.java:396) ch.qos.logback.classic.Logger.debug(Logger.java:503) com.mchange.v2.log.slf4j.Slf4jMLog$Slf4jMLogger$DebugLogger.log(Slf4jMLog.java:204) com.mchange.v2.log.slf4j.Slf4jMLog$Slf4jMLogger.log(Slf4jMLog.java:247) com.mchange.v2.resourcepool.BasicResourcePool$1DestroyResourceTask.run(BasicResourcePool.java:1074) com.mchange.v2.resourcepool.BasicResourcePool.destroyResource(BasicResourcePool.java:1101) com.mchange.v2.resourcepool.BasicResourcePool.destroyResource(BasicResourcePool.java:1062) com.mchange.v2.resourcepool.BasicResourcePool.access$100(BasicResourcePool.java:44) com.mchange.v2.resourcepool.BasicResourcePool$5.run(BasicResourcePool.java:1316)

It gave us an additional clue – our logstash appender has not been closed properly by some of the applications. After looking at the core of the issue, we have concluded that the best solution would be to write a customized ContextDestroyer class which would be invoked by Tomcat when an application is undeployed and shutdown the remaining threads manually. We have added the following code to our applications:

@Component

public class LogbackContextFinalizer implements ServletContextListener {

private static final Logger LOGGER = LoggerFactory.getLogger(LogbackContextFinalizer.class);

@Override

public void contextInitialized(ServletContextEvent sce) {

LOGGER.info(“Executor Killer start”);

}

@Override

public void contextDestroyed(ServletContextEvent sce) {

LOGGER.info(“Shutting down Logback context ‘” + “‘ for “ + contextRootFor(sce));

LoggerContext loggerContext = (LoggerContext) LoggerFactory.getILoggerFactory();

loggerContext.stop();

}

private String contextRootFor(ServletContextEvent event) {

return event.getServletContext().getContextPath();

}

}

As a final obstacle, our Frontend application refused to undeploy after all of our fixes. We have determined that it was due to the sessions being held by the application. After forcefully shutting down all the sessions using the tomcat manager, the application was undeployed. We opted for a compromise in this case, as a legitimate solution would necessitate setting our session timeout below acceptable values. After undeploying, our frontend application will be active in the background until all the sessions timeout and will be undeployed on a subsequent deploy of the application.

It was not our first approach to this problem, but in our previous attempts, we were looking for a singular cause of our problems in all applications. It is a good idea to keep in mind that instead of a single cause you may be facing a multitude of issues. So it is always a good practice to exhaustively research your options before abandoning any approach – if we were to abandon one of our fixes on the way for ‘not fixing our problem’, other fixes would not have helped either. So as a final piece of advice, do not give up.

Do you need support in creating a web application?

- On 19/05/2017

0 Comments