MACHINE LEARNING IN PRACTICE – QUORA COMPETITION

Internal Project is an initiative that involves the implementation of projects funded additionally. As part of the internal program, the technological and development ideas of Apollogic employees are supported. The project of our Big Data team was entitled "Quora Competition".

The initiative assumed participating in Data Science competition; we conducted it in a three-person Big Data team consisting of:

Adam Maciaszek

Arkadiusz Rusin

Mikołaj Kęsy

The first part of the article presented:

- general information about the subject matter of the competition

- the course of competition

- our results

This time, we will discuss one of the technical issues which we had the opportunity to deal with.

Machine Learning

Computers, as calculating machines, have optimized the data analysis process very effectively, thus replacing a traditional piece of paper and a pen. Nowadays, the growth of information and the ability to store huge volumes of data have put the machines in the first place when it comes to exploring hundreds petabytes of information.

Today’s data storage process is characterized by one of the biggest growth rates, and the forecasts put forward by experts indicate that this process will continue to grow and that the total size of electronic data stored in the world in 2020 will exceed 44ZB (in 2004 it was only 4.4ZB source).

In this dynamic increase in various and gigantic data volumes, one of the major problems engineers have to deal with is the way of storing and managing this data.

On the other hand, expert opinions indicate that in the future, data will be one of the most valuable resources for humans. If we think of electronic data as another precious resource, such as gold, then we think of hard-working miners looking for gold in petagrams of useless land. This directs us to the idea of extracting valuable information from petabytes of worthless data stored on various memory disks.

After merging two worlds of experts in the field of data mining and their management, a new, interdisciplinary field emerges, called Machine Learning. It is dealt with by professionals in the fields of Data Science and Big Data.

Dynamic growth of data produced is a consequence of the rapidly growing trend imposed on us by the rapidly developing IoT market (the Internet of Things) – machines surrounding us generate enormous data volumes, but the increase in their production is also a consequence of human activity.

It is precisely humans who generate various types of information, such as text, graphic and video content, to which the Internet growth made a special contribution thanks to the emergence of social networking sites.

Nowadays, we are no longer able to process the material generated in finite time by ourselves, so we need powerful calculating machines to do it. Only these machines are able to cover the scale of this task.

Unfortunately, modern computers are only able to use numeric data mostly, performing a variety of operations on them. When dealing with text data, it is necessary to simulate the distinction between individual words in the lexical layer or to build groups of words with a high level of unambiguous relation.

Vector Space Model

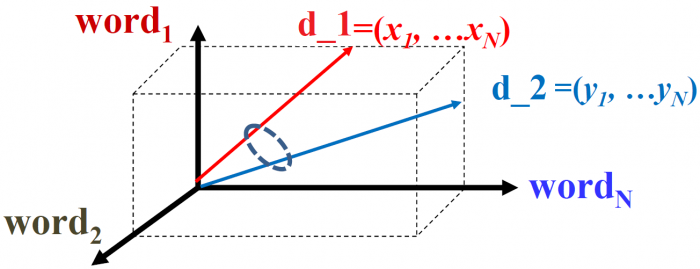

The VSM (Vector Space Model) is best suited for the above problem. It allows transforming the raw text to its vector representation. Every “document”, depending on the level of granulation we are dealing with in our problem, is represented as an N-dimensional vector, where N is the number of all terms (commonly called “keywords”), in the analyzed set. It is worth stopping at this point, and clarify the terms “document” and “keywords”.

Depending on the complexity of the problem that we need to deal with in our analysis, we can specify various levels of granulation in which we will build our vector:

- Word

- Sentence

- Document (set of sentences)

- Collection (set of documents)

In text analysis we can also distinguish many different units which are individual levels of the lexical representation of the text:

- Characters:

- n-gram “clusters” of letters

- sequences of letters

- Words:

- terms, or normalized words, which are the result of such methods as stemming (extracting the root of a word by removing a prefix or a postfix, it is defined by a set of various rules that lead to the automatic extraction of the word's root). Another method is called lemmatization (obtaining fundamental word for a given part of speech). Terms clearly represent a given word class.

- “stop-words” represent the most common words in texts, such as "the", "a". These words often do not carry any valuable meaning

- Sentences:

- a set of words that creates semantic and syntactic meaning

- - n-gram sets of words

- Parts of speech

It is worth to keep the above list of lexical levels in mind because this approach to text analysis allows creating a variety of vector models, each of which can provide different, but equally interesting answer to our problem.

For simplification, the further part of the article uses phrases “document d” to refer to the level representing the vector and “w words” reflecting the vector elements. Therefore, the use of the above naming allows the following description of our model: “Every document d_i (i-1,…,M where M is the number of analysed documents) is represented by the vector of N words w_j (j=1,…,N where N is the number of unique words).”

The figure above presents two separate documents represented by two vectors d_1 and d_2. Each of these vectors consists of 3 elements, which are identified by 3 unique words in our analysis.

Weight of words

Another issue is determining the weight of individual words. When analyzing text sets, we need to ask ourselves: “Which shared words are important for the similarity of texts?” This is a fundamental question that every analyst should be asking. We can distinguish several ways of determining word weights:

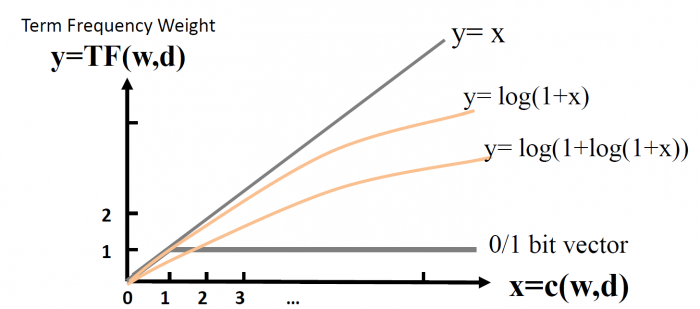

- TF weight (term frequency), in other words, the frequency of words occurrence. In this case, we are interested in finding words that often occur in the sentence, and thus may be crucial to us. There are many ways to calculate TF. For example, you can use bitmaps that include only the occurrence of a given word in the text. Therefore, every word has the same weight as long as it appears in the text (1 – when the word occurs in a sentence, 0 – when the word is missing from the sentence). We can also distinguish the weights by the number of occurrences of a given word in the sentence c(w, s). Many methods of representing TF weights have been proposed; it is possible to use a logarithmic function that grows significantly slower than the above-mentioned linear function representing the frequency of words occurrence.

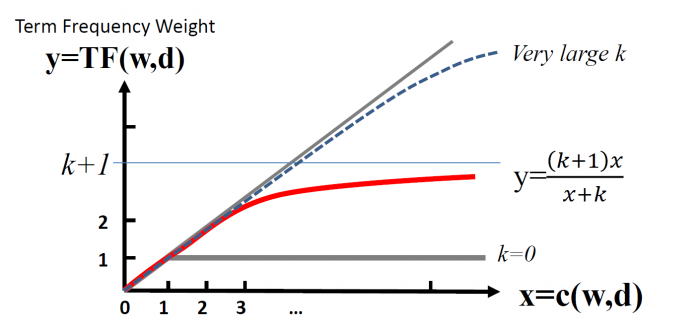

Unfortunately, the logarithmic function, just like the linear one, does not guarantee the possibility of bounding the functions above, so they approach infinity along with the increase of frequency of occurrence of words. This problem begins to be significant when a very large group of words occur in a document and take over the majority of the vector’s weight.

Such words include the above-mentioned “stop words” in particular. This phenomenon causes discrimination of rare words that may be of particular importance in the analyzed document. To avoid this problem, it is possible to apply BM25 transformation with TF weights restricted by upper limit for y = (k+1). However, if the value of k is very large, the above transformation will make the function being similar to a linear function.

- IDF weight (Inverse Document Frequency) represents the power of texts discrimination by a given word. This means that if there are words in the text that are occurring frequently in other texts as well, it may mean that these words do not have significant impact on the analysed sentence. These words include, among others, "stop words".

- TF-IDF is a weight reflecting the product of the frequency of words occurrence and the power of discrimination of a given word within a set of texts. This measure distinguishes especially the rare words within the whole set of documents, which at the same time are frequent words within the analysed document.

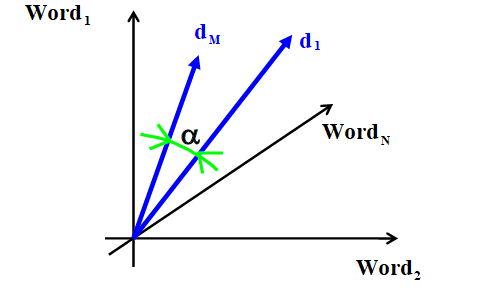

Having the appropriate vectors of words generated, representing the analyzed documents respectively, it is easy to imagine that every such document is characterized by a point in a multi-dimensional space.

In the competition we participated, the most important task was to compare two questions and determine whether they are unique or duplicates.

Therefore, it was necessary to identify a similarity function, which could describe the degree of similarity between the two vectors. In the case of one- or two-dimensional space, it is natural to apply the Euclidean metric. Unfortunately, when dealing with text analysis, each vector is represented by several dozen to several thousands of elements.

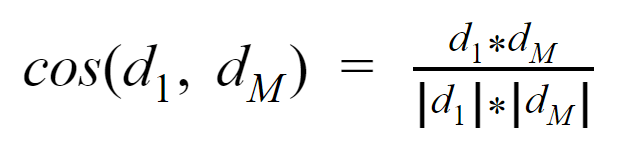

One of the measures we used in our solution was the cosine measure, which is based on the scalar product of two vectors and calculates the angle between them. If the angle equals 0, then the analyzed vectors will be equal, and the cosine measure reaches the highest value of 1.

Below are the examples of several pairs of questions from the training set that we used in the competition to build a classifier:

|

Question1 |

Question2 |

|

How do I become a data scientist in the data scientist team? |

How can I become a data scientist? |

|

What are the questions should not ask on Quora? |

Which question should I ask on Quora? |

|

What are the important algorithms in computer science? |

What is the importance of algorithm in computer science? |

|

Can I call you tonight? |

Please, could you call me? PLEASE! |

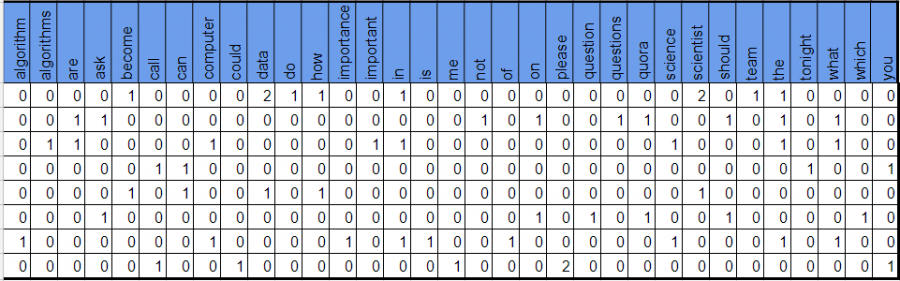

The Kaggle competition that we participated in allowed us to use several training pairs of questions. One of our approaches to the lexical analysis of the above statements was to build one large set of questions.

As a result, we were able to determine a list of unique vector elements. For the above example of 4 pairs of questions, by removing stop-words, we obtained 33 unique elements that were used to describe the individual questions. Below you will find fragments of codes that generate the word frequency array (TF), occurrence frequency of given words within the set of documents, and TF-IDF array.

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer import pandas as pd import numpy as np df = pd.DataFrame({ 'q1' : ['How do I become a data scientist in the data scientist team', 'What are the questions should not ask on Quora?', 'What are the important algorithms in computer science', 'Can I call you tonight'], 'q2' : ['How can I become a data scientist', 'Which question should I ask on Quora', 'What is the importance of algorithm in computer science?', 'Please, could you call me? PLEASE'] }) np_list = np.concatenate((df.q1, df.q2))

Generating a TF word frequency array, based on the word count of a given question:

vect = CountVectorizer() tf = pd.DataFrame(vect.fit_transform(np_list).toarray(), columns=vect.get_feature_names())

Generating a DF vector that represents the instances of individual words within all the questions in the set. TF functionality for bit maps was applied here:

vect = CountVectorizer(binary=True) df = vect.fit_transform(np_list).toarray().sum(axis=0) df_result = pd.DataFrame(df.reshape(1, len(vect.get_feature_names())), columns=vect.get_feature_names())

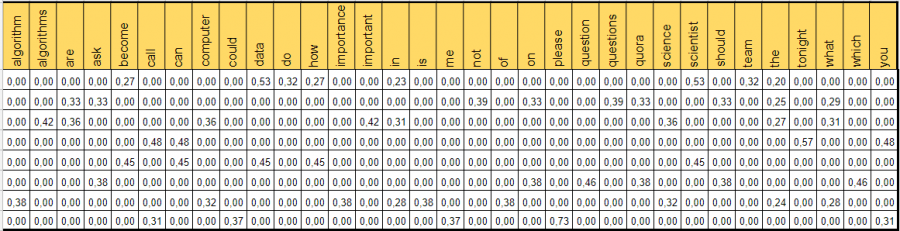

The last table shows the use of the second vectorizer that calculates the TF-IDF weight:

vect = TfidfVectorizer() tfidf = pd.DataFrame(vect.fit_transform(np_list).toarray(), columns=vect.get_feature_names())

The above-mentioned operations calculate the basic vector models for tested sentences. In the final stage, we wanted to compare question vectors for individual pairs. In this case, we used TF-IDF vectors, and the cosine measure described in the following paragraph was used as the measure of similarity:

from scipy import spatial df_1 = tfidf.iloc[:4, :] df_2 = tfidf.iloc[4:, :] result = [] for i in range(4): result.append(1-spatial.distance.cosine(np.array(df_1.iloc[i,:]), np.array(df_2.iloc[i,:])))

|

Question1 |

Question2 |

TF-IDF cosine similarity |

|

How do I become a data scientist in the data scientist team? |

How can I become a data scientist? |

0.712959832 |

|

What are the questions should not ask on Quora? |

Which question should I ask on Quora? |

0.504939411 |

|

What are the important algorithms in computer science? |

What is the importance of algorithm in computer science? |

0.462370678 |

|

Can I call you tonight? |

Please, could you call me? PLEASE! |

0.2928629354 |

Performing the correct vectorization and then extracting similarities between the selected pairs of questions on the basis of the received vectors was of great importance in the process of building the competition classifier. An important stage was appropriate pre-processing, which was the initial phase in our workflow. It was at this stage where we had a big influence on the unique “keywords” of every vector.

The following actions had the greatest impact on the final results of the competition:

- - using pre-processed data in the vectorization phase and calculation of similarities between the respective pairs of questions

- Application of various lexical levels within elements of the vector

- Using a mechanism for detecting synonyms

- Expanding the training set

- Data cleaning

It is worth noting that the issue of vectorization puts heavy burden on memory and time of calculations. Therefore, it is worth to keep in mind the scope of individual unique elements (number of columns), the types of data stored in the array (whether it is necessary to use numpy.float64 or numpy.int64). Every enhancement can produce significant results at the moment of calculating individual arrays.

- On 13/09/2017

0 Comments